The rise of 400G in the data center

Author

administrator

Date

2019-01-23 09:56

July 3, 2018

By Niall Robinson, ADVA Optical Networking

The transition to 400 Gbps is rapidly changing how data centers and data center interconnect (DCI) networks are designed and built. But how will the landscape look when the battles between competing technologies in each area have been settled?

The move to 400-Gbps transceivers in the data center can be viewed as a game of two halves, the client side and the networking side, and it will be interesting to see how things pan out in each. Right now, two competing multi-source agreements (MSAs) are battling it out in the plug space, both vying to be the form factor of the 400-Gbps era. It’s all about quad small form factor pluggable double density (QSFP-DD) optical transceivers vs. octal small form factor pluggable (OSFP) optical transceivers (see Figure 1).

Let the battle begin

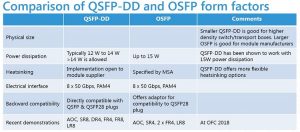

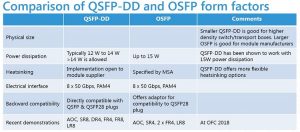

Of the two sides – client and networking – it’s a little easier to predict how things will go from the client point of view. That’s because even the highest-performance client interface can fit into either of the two physical form factors. For that reason, QSFP-DD looks to be moving into the lead with various reach options coming to market. The table below compares some of the key parameters between the two form factors, with many client reach options demonstrated live in San Diego at the recent Optical Fiber Communication Conference (OFC):

Driving the network side is the emergence of the Optical Internetworking Forum’s 400ZR DWDM coherent interface specification and the trend towards combining transmission and switching in one box. As always, the choice of form factor comes down to mechanics and power. The OSFP is a bigger module, providing lots of useful physical space for DWDM components. It also features heat dissipation capabilities through its own thermal management system, enabling up to 15 W of power. When you’re putting coherent capabilities into a very small form factor, power is key and so, in some respects, OSFP does have a significant advantage. Its Achilles’ heel, though, is that it’s a much longer device, thus consuming more board space; its power benefits aren’t especially necessary on the client side, either.

Also, for all OSFP’s space, power, and enhanced signal integrity performance, it doesn’t offer direct backwards compatibility to QSFP28 plugs. As a technology, it’s a blank slate with no 100-Gbps version, and so it can’t provide an easy transition from legacy modules. This is another reason why it hasn’t been universally accepted on the client side.

The QSFP-DD, on the other hand, does have direct backwards compatibility with QSFP28 and QSFP plugs, and that’s the key to the enduring popularity of this form factor. It means operators are able to take a QSFP-DD port and install a QSFP28 plug. The value of this convenience can’t be overstated. After all, it was this handy interoperability with legacy technology that helped the QSFP28 win out in the 100-Gbps race, as it made it possible to slot a 40-Gbps QSFP+ plug straight in.

There’s a lot of support for QSFP-DD with its small form factor. The downside, of course, is its lower power dissipation. It typically allows up to 12 W, making it much tougher to handle a coherent ASIC and keep it sufficiently cool. If QSFP-DD can be made to work, and there are many suppliers working on such products today, it looks like it would have the momentum to kill off OSFP, but we’re certainly not there yet.
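The power argument can be made concrete with a small sketch. It uses only the two limits quoted above (up to 15 W for OSFP, typically up to 12 W for QSFP-DD); the 14 W module draw in the usage lines is a hypothetical figure chosen for illustration, not a measured value for any real coherent plug.

```python
# Power limits as quoted in the article; real limits depend on the
# MSA power class and the host's cooling design.
POWER_LIMIT_W = {
    "OSFP": 15.0,
    "QSFP-DD": 12.0,
}


def headroom_w(form_factor: str, module_power_w: float) -> float:
    """Return the remaining power budget (negative if the module exceeds it)."""
    return POWER_LIMIT_W[form_factor] - module_power_w


def fits_power_budget(form_factor: str, module_power_w: float) -> bool:
    """True if a module drawing module_power_w fits the form factor's limit."""
    return headroom_w(form_factor, module_power_w) >= 0


# Hypothetical coherent module drawing 14 W (assumed value).
hypothetical_draw_w = 14.0
for name in POWER_LIMIT_W:
    print(name, fits_power_budget(name, hypothetical_draw_w))
```

Under that assumed draw, the module fits the OSFP budget with 1 W to spare but exceeds the QSFP-DD budget by 2 W, which is the gap suppliers working on coherent QSFP-DD products have to close.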

Applications for 400 Gbps

In the switching architecture on the client side, there’s an enormous ecosystem push towards 400 Gbps. A significant element here is white box switching, which has become a viable option for more operators due to the rise of software-defined networking (SDN) and production network offerings driven by the Open Compute Project (OCP).

Many companies are now contributing to the OCP. However, Edgecore Networks was the first to contribute the design of a 400 Gigabit Ethernet data center switch. Edgecore’s latest 1RU form factor switch provides 32 QSFP-DD ports, each capable of 400-Gbps operation. The move highlights the belief among the OCP community that 400-Gbps Ethernet switching will become crucial in the next couple of years.
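As a quick check on what that port count means, the aggregate front-panel capacity follows directly from the two numbers above. The function name is illustrative, and the result ignores any oversubscription or internal switch limits.

```python
def front_panel_capacity_tbps(ports: int, rate_gbps: int) -> float:
    """Aggregate front-panel capacity in Tbps for identical ports."""
    return ports * rate_gbps / 1000


# 32 QSFP-DD ports at 400 Gbps each, as described in the article.
print(front_panel_capacity_tbps(32, 400))
```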

So how about 400 Gbps in DCI transport? Well, there are multiple approaches to introducing the new speed on this side, but the key issue is transmission distance. While 400ZR plugs are fine for campus and metro distances, they start to run out of capability over longer reaches. Stretched across 100 km, they can easily deliver all 400 Gbps of capacity. But when links get to a few hundred kilometers, the plug itself would have to operate at a lower data rate, turning the switch port into a 200-Gbps port or even 100 Gbps for long-haul.
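The rate-versus-reach trade-off can be sketched as a simple lookup. Only the 100 km figure and the 400/200/100-Gbps steps come from the text above; the 500 km boundary between "a few hundred kilometers" and long-haul is a placeholder assumption, since the real crossover depends on the plug, the fiber, and the amplification scheme.

```python
NOMINAL_RATE_GBPS = 400
FULL_RATE_MAX_KM = 100   # from the article: full 400 Gbps across 100 km
REGIONAL_MAX_KM = 500    # assumed boundary, for illustration only


def port_rate_gbps(distance_km: float) -> int:
    """Return the illustrative line rate of a 400ZR-style plug at a given reach."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    if distance_km <= FULL_RATE_MAX_KM:
        return 400
    if distance_km <= REGIONAL_MAX_KM:
        return 200
    return 100


def stranded_capacity_gbps(distance_km: float) -> int:
    """Switch-port capacity left unused when the plug falls back to a lower rate."""
    return NOMINAL_RATE_GBPS - port_rate_gbps(distance_km)


for km in (80, 300, 1500):
    print(km, port_rate_gbps(km), stranded_capacity_gbps(km))
```

The second function captures the operator's real concern: every fallback step leaves part of a 400-Gbps switch port paid for but unused.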

This is where emerging DCI technologies that are specifically developed for hyperscale applications come into play. There are several different approaches coming to market now, all aimed at maximizing throughput. These include harnessing probabilistic constellation shaping, for example, as well as 64QAM technology, which the likes of Nokia and Acacia are using to push the boundaries of spectral efficiency. These advanced technologies require highly sophisticated ASICs, which consume much more power and hence dissipate more heat than can be tolerated in a QSFP-DD or OSFP plug.

To deliver these advanced transmission capabilities, there are terminal platforms under development that will enable 400 Gbps in DCI networks by offering even higher capacities and speeds. Systems capable of transporting 600 Gbps of data over a single wavelength and delivering a total duplex capacity of 3.6 Tbps in 1RU have appeared at industry events such as OFC (see Figure 2). The advantage of this kind of platform is that it delivers more capacity at every distance, including the shorter campus and metro ranges where it provides 600 Gbps per wavelength – albeit with some added initial cost due to extra shelf space. The real benefit, though, comes when used with regional or long-haul links, where this type of terminal stays at a higher data rate for longer distances.
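A rough sketch shows why the per-wavelength rate matters. It assumes the 3.6-Tbps figure is the sum of the line rates of all wavelengths in the 1RU terminal, which the article does not spell out, and it simply counts how many wavelengths are needed to carry that total at different per-wavelength rates.

```python
def wavelengths_needed(total_gbps: int, per_wavelength_gbps: int) -> int:
    """Number of wavelengths required to carry total_gbps (rounded up)."""
    if per_wavelength_gbps <= 0:
        raise ValueError("per-wavelength rate must be positive")
    # Integer ceiling division avoids floating-point rounding.
    return -(-total_gbps // per_wavelength_gbps)


TOTAL_GBPS = 3600  # 3.6 Tbps, assumed to be the summed line rate

for rate in (600, 400, 200):
    print(rate, wavelengths_needed(TOTAL_GBPS, rate))
```

Under that assumption, six wavelengths at 600 Gbps carry the full 3.6 Tbps, while the same traffic needs nine wavelengths at 400 Gbps and eighteen at 200 Gbps, so a terminal that holds a higher rate over distance uses less spectrum and fewer transceivers.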

The emergence of 400 Gbps is reshaping the data center and DCI optical landscape. The first half of this game is being played out right now on the client side. QSFP-DD appears to be in the lead, but the second half of the game, on the line side, could well tilt the playing field in favor of OSFP.

Early discussions on what’s feasible for the next Ethernet rate, after 400G, have already begun. So it’s likely that two or three years from now many vendors will be discussing the next generation of pluggable form factors for both client and line side products, evolving the data center and DCI market once again.

Niall Robinson is vice president, global business development at ADVA Optical Networking. He has more than 26 years in the telecommunications industry. A recognized expert on data center interconnect technology and a frequently requested speaker at conferences all over the world, Niall specializes in moving cutting-edge technology from the lab into the network.

Figure 1. Two transceiver form factors, the QSFP-DD (left) and OSFP (right), are being touted for support of 400-Gbps transmission. Each has advantages – and drawbacks.

Figure 2. A new generation of DCI platforms capable of 600-Gbps transmission should advance the use of 400 Gbps in a wider range of applications.
